When analyzing

In Oracle Database, a NULL value indicates that a particular column in a row has no data or an unknown value. Nulls can appear in columns of any data type that are not constrained by NOT NULL or PRIMARY KEY integrity rules. Oracle Database treats a character value with a length of zero as a NULL.

However, unlike some other languages, Oracle's NULL is not equivalent to the numeric value of zero. So, it is important to avoid using NULL to represent zero. Using zero clearly communicates that there is a quantity of none, whereas NULL can be ambiguous, which can cause unexpected results in calculations and logical operations, leading to errors. The behavior of NULLs depends on the context and requires operators and functions specifically designed to handle them.

In programming languages, conditional expressions refer to structures that create decision points in code. These structures enable the program to take different paths based on whether a certain condition is true or false. Conditional expressions typically incorporate an IF statement, often accompanied by ELSE, THEN, or equivalents.

While there are arguments against considering SQL as a programming language, it does offer a compatible structure capable of evaluating data during code execution. This capability allows the logical approach of the code to adapt based on certain values of data at runtime. In Oracle SQL, there are four different ways that can feature conditional expressions: CASE statement, as well as DECODE, NVL, and NULLIF functions. Let's get started!

The CASE Statement

The CASE statement evaluates an expression and, based on its value, evaluates one of a series of expressions as specified in the CASE statement. Here's a general syntax of a CASE statement:

CASEWHEN condition1 THEN result1WHEN condition2 THEN result2...ELSE default_resultEND

In the code lines above, the keyword CASE starts the conditional expression. Then WHEN condition THEN result represents a condition-expression pair. For example, when condition1 is true, the corresponding result1 is returned. You can have multiple WHEN clauses to handle different conditions. Oracle will evaluates the series of conditions and returns the corresponding value for the first true condition. If none of the conditions are true, the ELSE provides a default result. Lastly, the keyword END marks the end of the CASE statement.

To further illustrate, let's consider a table named tbl_moves shown in the figure below:

Table above lists Pokémon moves and their information. Here, pp stands for Power Point, which represents cost of Pokémon moves, and accuracy refers to success rates. Now suppose that you want to categorize the Pokémon moves depending on the ratio between accuracy and pp. To be more specific:

- Ratio is greater than 3.0: Highly efficient moves

- Ratio is between 2.0 and 3.0: Moderately efficient moves

- Ratio is less than 2.0: Low efficient moves

In Oracle SQL, you can achieve this as follows:

The query above essentially creates a new column (move_efficiency) that categorizes each move in the retrieved data. The column value is based on the calculated efficiency ratio (accuracy / pp) and can be one of the three categories mentioned earlier or Efficiency not determined.

The DECODE Function

The DECODE function serves as the original IF THEN ELSE statement, allowing you to map specific input values to desired output values based on predefined conditions. The basic syntax of the function is as follows:

DECODE(expression, search_value1, mapping_value1,search_value2, mapping_value2,...default_value)

In the code lines above, the required argument expression is the value you want to compare against the search values. This typically a column or operations based on columns. The search_valueN represents a series of values against which the expression is compared, whereas mapping_valueN signifies the corresponding result value if the expression matches the respective search_valueN.

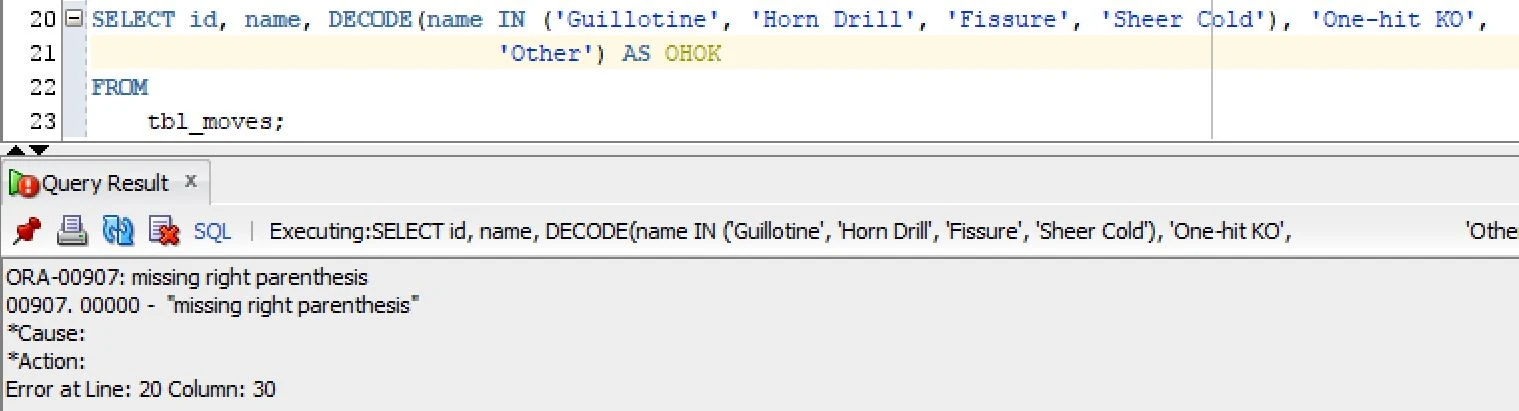

In the query above, I selected rows from tbl_moves by looking at the id and name column value as is and then use DECODE function to translate the values in name according to the search expressions in DECODE, which in this case looks at only four moves but could have easily been expanded to translate, or decode, all of the Pokémon moves.

Both DECODE function and CASE statement offer conditional logic in Oracle SQL, but they have some differences. One of the differences is that while DECODE can only handle equality, the CASE WHEN function can handle complex logic with various operators. In other words, the logic you can represent in DECODE function is only if the expression exactly matches to search expression; you cannot use operators like !=, >, or etc.

Another difference is that the DECODE function is limited to scalar data types, while CASE WHEN statement can work with both scalar and collection data types. For example, queries like below is not allowed:

Lastly, the number of arguments you can have for DECODE function is limited to 255, including the expression, search values, corresponding results, and default values. However, CASE WHEN statement doesn't have such limitations, as it is not a function but statement. Thus, CASE WHEN is generally considered the preferred approach due to its flexibility; the only reason I can find where DECODE is useful is to maintaining functionality of older queries, as CASE WHEN is introduced in version 8.1.6 while DECODE has been available in earlier Oracle versions.

The NVL Function

The NVL function is used to replace NULL value with a specified default value. It takes two arguments: the first is the expression that may contain NULL, and the second is the value to be returned if the expression is NULL.

NVL(expression, default_value)

For example, consider a query below:

In the fetched data shown above, we see that there could be NULL in the calculated move_efficiency column, depending on the availability in accuracy and/or pp column values. To avoid NULL, we can apply the NVL function as follows:

The NULLIF Function

In Oracle, the NULLIF function returns a NULL value if two expressions provided as arguments evaluate to the same value. Otherwise, it returns the first expression. Here's how it works:

NULLIF(expression1, expression2)

Both expression1 and expression2 are required, and they must be the same data type. The NULLIF function is useful for comparing multiple rows wherein an older and newer version of a particular value exist and you want to cull out those that are still not updated or perhaps already been updated. For example:

The tbl_my_pokemon has eight columns, including base_experience and current_experience. Now, suppose that you want to check if there is any updates on Pokémon experiences and calculate amount of experience gains. You can achieve this as follows:

In the query above, the NULLIF function compares current_experience with base_experience and returns NULL if the two column values are the same. Otherwise it returns the first argument, which is the current_experience. Then I subtracted the base_experience from the output of the function, which represents the amount of experience a Pokémon gained.

0 Comments